Gigabytes and terabytes are so passé. It’s soon going to be a zettabyte world thanks to all the digital data—images, books, music, movies, video, documents, maps, you name it—that we collect and engage with throughout our lives.

Industry research firm IDC predicts a 50-fold increase in the total amount of digitally stored data between 2010 and 2020. This means that in the next seven years, the world's total data footprint will reach 40 zettabytes (that's 40 trillion gigabytes), and every man, woman, and child on the planet will account for some 5.2 terabytes of data, whether in the cloud or in local storage.
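IDC's figures hang together arithmetically. The short script below assumes decimal units (1ZB = 10^21 bytes). From the numbers quoted above, it works out three things: the 2010 footprint implied by a 50-fold rise, the compound yearly growth rate, and the world population implied by 5.2TB per person.

```python
# Worked arithmetic for IDC's projection, using only the figures quoted above:
# 40 ZB in 2020, a 50-fold rise since 2010, and about 5.2 TB per person.
# Assumption: decimal units (1 ZB = 10**21 bytes, 1 TB = 10**12 bytes).
ZB = 10**21
TB = 10**12
GB = 10**9

total_2020 = 40 * ZB
growth_factor = 50
years = 2020 - 2010

total_2010 = total_2020 / growth_factor           # implied 2010 footprint
annual_growth = growth_factor ** (1 / years) - 1  # compound annual growth rate
implied_population = total_2020 / (5.2 * TB)      # people needed to hold 40 ZB at 5.2 TB each

print(f"40 ZB = {total_2020 // GB:,} GB")
print(f"Implied 2010 footprint: {total_2010 / ZB:.1f} ZB")
print(f"Implied growth: {annual_growth:.1%} per year")
print(f"Implied population: {implied_population / 1e9:.1f} billion")
```

A 50-fold rise over ten years works out to roughly 48 percent growth per year. Dividing 40ZB by 5.2TB per person implies a population of about 7.7 billion.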

That's a lot of ones and zeroes, and, unfortunately, help is not on the way in the form of some high-tech, sci-fi breakthrough. To wit: Holographic storage will not bail us out in the next seven years. Nope, between now and 2020, the heavy lifting will be done by that venerable mainstay of storage—the mechanical hard drive. And, yes, USB flash drives, SSDs (solid-state drives), optical drives, and even tape backup systems will also remain in play.

None of these technologies is poised for a revolution, but we should see some interesting evolution in various hardware areas. So let's take a peek at the incremental technology improvements that will help humankind reach that magic 40 zettabyte mark.

Hard drives: Dull but reliable

The workhorse hard drive will remain the dominant storage mechanism for the world's data into the foreseeable future. According to Gartner's John Monroe and Joseph Unsworth, hard drives will still account for 97 percent of total drive sales in 2016, despite the penetration of SSDs into the desktop and laptop markets. Hard drives continue to lead SSDs in both capacity and price. They will keep that lead even as flash drives grow in capacity and drop in price.

Luckily, hard drive performance and capacity will continue to improve in the coming years, thanks to several new technologies on the horizon. Hitachi Global Storage Technologies recently announced helium-filled drives aimed at enterprise and cloud storage. These drives promise a 40 percent increase in capacity and a 20 percent improvement in energy efficiency.


Helium has one-seventh the density of air. As such, the element can reduce the drag and turbulence between drive platters, which translates to more precise read/write head placement, and allows narrower tracks to be written and more disk platters to be placed inside a drive.

Hitachi hasn't commented on increases in areal density (that is, how many bits can be squeezed into a single square inch), but it has said that its new 3.5-inch helium-filled drives, scheduled for delivery sometime in 2013, will boast seven platters instead of the current five. This increase in disk platters—all thanks to helium—will give us that 40 percent capacity increase per drive.
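The 40 percent figure follows directly from the platter count. This assumes each platter in the new drives holds the same amount of data as today's platters, which fits Hitachi's silence on areal density. Because per-platter capacity cancels out, it is left out of this minimal sketch.

```python
def capacity_gain(old_platters: int, new_platters: int) -> float:
    """Fractional capacity increase from adding platters at constant areal density."""
    return new_platters / old_platters - 1

gain = capacity_gain(5, 7)
print(f"{gain:.0%}")  # 40%
```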

Two technologies still under development could deliver a tenfold or greater increase in areal density: the long-promised (but yet to be commercialized) Heat-Assisted Magnetic Recording (HAMR), and patterned media such as Self-Ordered Magnetic Arrays (SOMA).

HAMR uses current read/write technology in conjunction with a laser that heats the media. The heat is needed to write to disk-coating compounds such as iron/platinum alloys. These compounds are capable of greater areal density than today's, but are less magnetically malleable until heated. Particle separations of 8 nanometers and even 3nm are envisioned. HAMR remains in development, however, and we shouldn't expect to see anything deployed for at least two years.

In today's magnetic layers, the particles that are oriented to represent data are arranged rather chaotically, and the same is true of HAMR media. This makes them difficult to pack any tighter than they currently are. Patterned media such as SOMA (a group of nanoparticles that can be induced to align in an ordered fashion) take a different approach. They pack magnetic bits much tighter by eliminating the random shapes and spacing of the current technology. It all sounds great on paper, but deploying this technology en masse at an affordable price will be a challenge.
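To put areal density in concrete terms, here is an idealized calculation. It assumes one bit per magnetic island on a square grid, which is roughly the patterned-media ideal. Conventional and HAMR media need many grains per bit, so treat the results as upper bounds. The 8nm and 3nm pitches are simply the particle separations mentioned above.

```python
# Idealized areal density: one bit per island, islands on a square grid,
# pitch = center-to-center spacing. Real granular media need many grains per bit.
NM_PER_INCH = 25.4e6  # 1 inch = 25.4 mm = 25,400,000 nm

def bits_per_square_inch(pitch_nm: float) -> float:
    bits_per_inch = NM_PER_INCH / pitch_nm
    return bits_per_inch ** 2

for pitch in (8.0, 3.0):
    density = bits_per_square_inch(pitch)
    print(f"{pitch:.0f} nm pitch -> about {density / 1e12:.0f} terabits per square inch")

# Density grows with the square of linear density, so a tenfold gain in areal
# density needs only about a 3.16x tighter spacing (the square root of 10).
print(f"Pitch reduction for 10x density: {10 ** 0.5:.2f}x")
```

The square-law relationship is why modest-sounding cuts in particle spacing translate into the tenfold density gains promised above.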

In terms of personal data storage, hybrid hard drives, which marry high-speed flash memory to traditional spinning disks, will likely make a greater impact on our lives than any fancy new technology cooked up in an R&D lab. Hybrid drives deliver the storage capacities of traditional hard drives along with some of the performance benefits of SSDs, but at only twice the price per gigabyte of standard hard drives.
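A simple weighted-average model shows why hybrids deliver only some of an SSD's benefit. Requests served from the flash cache run at flash speed, and misses fall back to the platters. The latencies below are hypothetical round numbers chosen for illustration, not measurements of any shipping drive.

```python
def effective_latency_ms(hit_rate: float, flash_ms: float, disk_ms: float) -> float:
    """Average access time when a fraction `hit_rate` of requests hits the flash cache."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * flash_ms + (1.0 - hit_rate) * disk_ms

FLASH_MS = 0.1  # hypothetical flash access time
DISK_MS = 10.0  # hypothetical seek plus rotational latency

for hit in (0.0, 0.5, 0.9, 1.0):
    print(f"hit rate {hit:.0%}: {effective_latency_ms(hit, FLASH_MS, DISK_MS):.2f} ms")
```

Under these assumptions, even a 90 percent hit rate leaves the average at 1.09ms, roughly ten times the all-flash figure. The occasional trip to the platters dominates.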

Seagate is already in the hybrid drive game, and Toshiba and other drive manufacturers have recently weighed in with plans for hybrid drives. Toshiba sent samples of its 1TB and 750GB hybrid drives last fall to manufacturing partners. Toshiba expects 3 million of the hybrid drives to be produced by the end of 2014. However, unless the products can approach the tangible kick in performance delivered by SSDs, they may be relegated to being a stopgap solution.

The future of flash memory: Speed and price reductions

Smartphones, tablets, USB flash drives, digital cameras, video recorders, and SSDs all rely on fast, rugged, nonvolatile NAND flash memory. Gartner predicts that yearly NAND sales will reach 200 petabytes by 2016, up from just 50 petabytes in 2012. Much of that memory will go into SSDs for servers and desktop PCs, as well as into laptops, tablets, and other mobile devices.

The difference in speed between an SSD and a fast hard drive is obvious even to the untrained eye. In PCWorld's December 2012 roundup, the fastest consumer SSDs read at almost 500 MBps and wrote at over 600 MBps. Meanwhile, a high-end, 10,000-rpm hard drive averaged around 200 MBps reading and writing. That's a two- to threefold advantage in performance, and enterprise-grade SSDs are even faster.
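The speedups fall straight out of the roundup numbers. The sketch below uses the approximate rates quoted above and a hypothetical 10GB file. It assumes decimal units and purely sequential transfers.

```python
SSD_READ, SSD_WRITE = 500, 600  # fastest consumer SSDs in the roundup, MB per second (approx.)
HDD_RATE = 200                  # 10,000-rpm hard drive, read and write, MB per second (approx.)

def seconds_to_transfer(size_gb: float, rate_mb_per_s: float) -> float:
    """Time to move a file sequentially; decimal units, 1 GB = 1000 MB."""
    return size_gb * 1000 / rate_mb_per_s

size = 10  # hypothetical 10GB file
print(f"Read speedup:  {SSD_READ / HDD_RATE:.1f}x")
print(f"Write speedup: {SSD_WRITE / HDD_RATE:.1f}x")
print(f"10GB write: SSD {seconds_to_transfer(size, SSD_WRITE):.1f} s, "
      f"HDD {seconds_to_transfer(size, HDD_RATE):.1f} s")
```

Reads come out 2.5 times faster and writes 3 times faster. The hypothetical 10GB write drops from 50 seconds to under 17.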


Current consumer SSDs top out at 512GB, and each gigabyte costs 10 times what a gigabyte of a platter-based hard drive costs. The prices of both storage technologies are dropping at similar rates (20 to 25 percent a year), so this 10-to-1 ratio should remain about the same for at least the next five years, according to numbers Gartner shared with us.
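Why does the ratio hold steady? If both prices fall by the same percentage each year, they shrink by the same factor, so their ratio doesn't move. This sketch normalizes the hard-drive price to 1 and uses a hypothetical 22 percent yearly decline from within the quoted range. It also checks the worst case for the ratio's stability: SSDs falling 25 percent a year while hard drives fall only 20 percent.

```python
def project_price(p0: float, annual_decline: float, years: int) -> float:
    """Price per GB after `years` of a constant yearly decline: p0 * (1 - r) ** years."""
    return p0 * (1 - annual_decline) ** years

HDD_PRICE = 1.0   # normalized price per GB
SSD_PRICE = 10.0  # ten times the hard-drive price, per the ratio above
RATE = 0.22       # hypothetical yearly decline within the 20 to 25 percent range

for years in range(6):
    hdd = project_price(HDD_PRICE, RATE, years)
    ssd = project_price(SSD_PRICE, RATE, years)
    print(f"year {years}: HDD {hdd:.3f}, SSD {ssd:.3f}, ratio {ssd / hdd:.1f}")

# Extremes of the quoted range: SSDs drop 25 percent a year, hard drives 20 percent.
spread = project_price(SSD_PRICE, 0.25, 5) / project_price(HDD_PRICE, 0.20, 5)
print(f"ratio after 5 years at the extremes: {spread:.1f}")
```

Even at the extremes of the range, the gap narrows only to about 7 to 1 after five years.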

Traditionally, NAND has been limited to a relatively small number of program/erase (P/E) cycles. Each cycle consists of erasing a memory cell and then writing to it. Fortunately, the P/E problem is being rendered moot by advanced operating system support and by firmware techniques such as wear-leveling. That leaves cost and capacity as SSD technology's only real constraints.
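Wear-leveling spreads erase cycles across the whole chip instead of wearing out one spot. Here is a toy illustration of the core idea, dynamic wear-leveling, where every write goes to the least-worn block. The `Block` and `WearLeveler` names are hypothetical. Real SSD controllers also track live data, move cold data, and maintain logical-to-physical mapping tables, none of which is modeled here.

```python
import heapq
from dataclasses import dataclass

@dataclass(order=True)
class Block:
    erase_count: int  # P/E cycles consumed so far (compared first)
    block_id: int     # tie-breaker, so equally worn blocks are used in id order

class WearLeveler:
    """Toy dynamic wear-leveling: each write erases and programs the least-worn block."""

    def __init__(self, num_blocks: int, pe_limit: int) -> None:
        self.pe_limit = pe_limit
        self.blocks = [Block(0, i) for i in range(num_blocks)]
        heapq.heapify(self.blocks)  # min-heap ordered by (erase_count, block_id)

    def write(self) -> int:
        """Use the least-worn block for one write and return its id."""
        if self.blocks[0].erase_count >= self.pe_limit:
            raise RuntimeError("every block has reached its P/E limit")
        block = heapq.heappop(self.blocks)
        block.erase_count += 1
        heapq.heappush(self.blocks, block)
        return block.block_id

    def erase_counts(self) -> dict[int, int]:
        return {b.block_id: b.erase_count for b in self.blocks}

wl = WearLeveler(num_blocks=4, pe_limit=10_000)
for _ in range(10):
    wl.write()
print(wl.erase_counts())
```

After ten writes, the four blocks show three, three, two, and two erases, instead of ten erases piled onto a single block. The drive as a whole can therefore absorb roughly the P/E rating multiplied by the number of blocks.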

Currently, faster and more expensive SLC (single-level cell, 1 bit per cell) NAND rated at 100,000 P/E cycles is used in enterprise-level drives. MLC (multilevel cell, 2 bits per cell) NAND rated at 10,000 P/E cycles is used in consumer-grade drives. This pattern is likely to hold for the near future, especially as firmware improves practical longevity even further. It may have to, because as dies shrink, so do the P/E ratings of NAND. TLC (triple-level cell, 3 bits per cell) NAND is often bandied about in SSD discussions, but it still falls short on two counts. Its lifespan is currently about 1,000 P/E cycles, and it's slow. Without substantial improvements in both, you'll see it only in smaller mobile devices over the next few years.
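P/E ratings translate into real-world lifespan through a back-of-the-envelope formula. Total writable data is roughly capacity times P/E cycles, divided by write amplification (the ratio of data physically written to NAND to data the host asked to write). The drive size, write amplification, and daily workload below are hypothetical. The sketch also assumes perfect wear-leveling.

```python
def lifetime_years(capacity_gb: float, pe_cycles: int, write_amp: float,
                   gb_written_per_day: float) -> float:
    """Years until the rated P/E cycles are used up, assuming perfect wear-leveling."""
    total_writable_gb = capacity_gb * pe_cycles / write_amp
    return total_writable_gb / gb_written_per_day / 365

# Hypothetical workload: 256GB drive, write amplification of 3, 20GB written per day.
for name, pe in (("SLC", 100_000), ("MLC", 10_000), ("TLC", 1_000)):
    years = lifetime_years(256, pe, 3.0, 20)
    print(f"{name}: about {years:,.0f} years")
```

Under these assumptions, even TLC lasts about 12 years on a light desktop workload. The result scales directly with write volume and write amplification, though, so heavier server workloads shrink it quickly.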

The biggest challenge for NAND is physics. Greg Wong of Forward Insights says there is most likely only one die shrink left after Intel's current 20-nanometer process. After that, decreasing endurance and performance will force a halt to further reductions in die size for flash memory. When asked how much further Intel might be able to shrink the die, Wong said he was unsure.

But as many real estate–challenged metropolitan areas prove, when you run out of horizontal space, you can always go vertical. To this end, 3D, or layered, NAND chips will likely provide the capacity increases NAND needs to keep growing.
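Here is a rough sense of why going vertical helps. To first order, a die's capacity is cells per layer times layers times bits per cell, so stacking multiplies capacity without shrinking the cells. The cell count and layer count below are hypothetical. Real 3D designs also change per-layer density, so treat this as an upper-bound sketch.

```python
def die_capacity_gbit(cells_per_layer: float, layers: int, bits_per_cell: int) -> float:
    """First-order die capacity in gigabits: cells per layer x layers x bits per cell."""
    return cells_per_layer * layers * bits_per_cell / 1e9

CELLS_PER_LAYER = 32e9  # hypothetical cell count for one planar layer

planar = die_capacity_gbit(CELLS_PER_LAYER, layers=1, bits_per_cell=2)    # MLC, one layer
stacked = die_capacity_gbit(CELLS_PER_LAYER, layers=24, bits_per_cell=2)  # hypothetical 24 layers
print(f"planar: {planar:.0f} Gbit, 24-layer stack: {stacked:.0f} Gbit")
```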

With a large, well-established manufacturing infrastructure behind NAND, it's unlikely that we'll see serious inroads by competing nonvolatile memory technologies anytime soon. But trust us: various technologies are vying to offer an alternative, including HP's Memristor (memory resistor), Toshiba's ReRAM (resistive memory), and FeTRAM (ferroelectric transistor memory), to name just a few.

Optical: On the way out?

Gartner analyst John Monroe describes CD/DVD/Blu-ray as "the cockroach of the industry." Nobody really wants the technology. Nobody is really satisfied with it. But you can't get rid of optical because it's still by far the cheapest removable media available. That said, according to a DigiTimes.com report, Robert Wong, chairman of Taiwan's largest optical disc maker, CMC Magnetics, warned that optical media prices will jump by 50 percent in the second half of 2013 due to plant closures in 2012 and an industry reshuffling. Higher prices could very well hasten the format’s decline.

Moving forward, consumers will deal with optical media primarily as a physical delivery system for entertainment and software, and occasionally as emergency boot media. Most PCs still ship with optical drives, but increasingly these drives will be offered only as external USB options.

Though the industry appears stagnant, new optical technologies are still being developed. Fujifilm, for one, recently announced a 1TB disc to ship in 2015 (no pricing was available). TDK has also been showing a similar technology. If shipped with affordable media (it's supposedly easier to produce than Blu-ray), 1TB optical could keep the unloved, unwanted, but still undeniably handy optical disc around for the next decade or so.

Meanwhile, Blu-ray continues to be offered on PCs and remains a convenient way to share maximum-quality, high-definition movie content. BDXL for write-once discs currently offers 128GB of capacity. However, BDXL drives need to become more prevalent and BDXL media needs to drop in price for the format to gain traction. That said, 4K video, the big trend at CES 2013, could give new life to BDXL, and to Blu-ray as a whole, if the Blu-ray Disc Association adds 4K support to the format.

Data buses and interfaces

With current SSDs bumping up against the limits of even SATA 6Gbps (SATA III, as it's usually referred to), something will have to give in the near future. But the SATA III bus will remain in play for at least several more years, because it's more than adequate for hard drives, which will dominate the landscape through 2020.

Several new interface buses have the performance chops to make inroads in 2013.

Thunderbolt (née Light Peak) from Apple and Intel already ships on newer Macs and a relatively small number of cutting-edge PC motherboards. It's basically an external version of PCI Express, with a whopping 10Gbps transfer rate. For high-performance backup and external storage, particularly when an array of drives is in play, Thunderbolt should eventually supplant the slower eSATA and USB 3.0. However, USB will certainly remain as a peripheral and flash drive bus.

SATA Express (SATA 3.2) is also on the horizon. This internal storage bus carries the SATA software and protocol layer over the physical PCIe bus and connectors. Intended for internal SSDs, it supports legacy SATA/AHCI as well as an NVMe (Non-Volatile Memory Express) mode; NVMe is an interface specification designed for SSDs. In theory, SATA Express's throughput is limited only by the bandwidth of the PCIe bus. That's 5 gigatransfers per second per lane with PCIe 2.0, and 8 gigatransfers per second per lane with the PCIe 3.0 found on newer Intel chipsets.
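Raw link rates overstate usable bandwidth, because both buses spend some bits on line encoding. SATA 6Gbps and PCIe 2.0 use 8b/10b encoding (8 data bits sent as 10). PCIe 3.0 uses the leaner 128b/130b scheme. The sketch computes payload bandwidth before any further protocol overhead. The two-lane figure is only an illustrative lane count. Each PCIe lane moves one bit per transfer, so GT/s and Gbps line up here.

```python
# Usable bandwidth = raw rate x encoding efficiency / 8 bits per byte.
LINKS = {
    "SATA 6Gbps": (6.0, 8 / 10),        # 6 Gbps line rate, 8b/10b encoding
    "PCIe 2.0 lane": (5.0, 8 / 10),     # 5 GT/s per lane, 8b/10b encoding
    "PCIe 3.0 lane": (8.0, 128 / 130),  # 8 GT/s per lane, 128b/130b encoding
}

def usable_mb_per_s(link: str, lanes: int = 1) -> float:
    """Payload bandwidth in MB per second (decimal), before protocol overhead."""
    giga_per_s, efficiency = LINKS[link]
    return giga_per_s * 1e9 * efficiency / 8 / 1e6 * lanes

for link in LINKS:
    print(f"{link}: {usable_mb_per_s(link):.0f} MBps")
print(f"Two PCIe 3.0 lanes: {usable_mb_per_s('PCIe 3.0 lane', lanes=2):.0f} MBps")
```

SATA 6Gbps tops out at 600 MBps before protocol overhead. That is why SSDs reading at roughly 500 MBps and writing at around 600 MBps are already bumping against it, while a pair of PCIe 3.0 lanes offers more than three times as much headroom.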

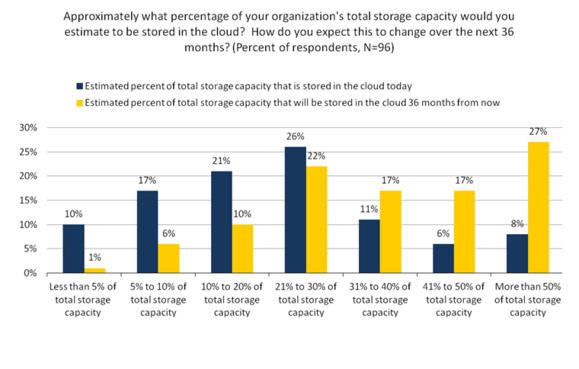

Cloud storage: Let it rain

Today about 7 percent of personal data is stored in the cloud, according to Gartner. But by 2016 that share will jump to 36 percent. Gartner says trends in mobility are driving the growth of "personal clouds." Camera-equipped phones and tablets that automatically upload video and pictures to the cloud are on the rise. They will create a "direct-to-cloud model, allowing users to directly store user-generated content in the cloud," Shalini Verma, principal research analyst at Gartner, wrote in a 2012 report.

Terri McClure, an analyst with Enterprise Strategy Group, says the more mobile gear we have, the more we'll want to push data to the cloud so we can later access documents, video, and music from anywhere.

On the back end, the growing number of big cloud storage services such as Amazon S3, Nimbus.io, and Nirvanix is enabling smaller consumer cloud services to flourish online. For example, Amazon S3 has become a turnkey service for customers like Dropbox. Dropbox just passed the 100 million registered user milestone and says one billion files are saved to its service every day.

For years these cloud warehouses were not held back by NAND, SSD, or HHD hardware issues. The hard part was scaling and managing crushing amounts of data. Traditional hierarchical file systems were never intended to store hundreds of millions or billions of files in a single namespace. This older network file system (NFS) architecture forced data to be spread across multiple file systems, sometimes in multiple locations, and into complex directory structures.

Enter object storage, a relatively new approach that organizes data into objects. Each object contains the data itself, as bytes, plus metadata describing it. It is identified by a unique ID in a flat namespace rather than by a path through a directory tree. This allows data to be stored more efficiently and organized by metadata for easier retrieval.
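The object model is easiest to see in miniature. Below is a toy in-memory store with a flat namespace: objects are addressed by generated IDs rather than directory paths, and they can be found by their metadata. The class and method names are hypothetical and do not mirror the API of Amazon S3 or any other real service.

```python
import uuid
from dataclasses import dataclass, field

@dataclass
class StoredObject:
    data: bytes                                          # the object's raw bytes
    metadata: dict[str, str] = field(default_factory=dict)  # descriptive key/value pairs

class ObjectStore:
    """Toy object store: one flat namespace of IDs, no directory hierarchy."""

    def __init__(self) -> None:
        self._objects: dict[str, StoredObject] = {}

    def put(self, data: bytes, **metadata: str) -> str:
        """Store bytes plus metadata and return a newly generated object ID."""
        object_id = uuid.uuid4().hex
        self._objects[object_id] = StoredObject(data, dict(metadata))
        return object_id

    def get(self, object_id: str) -> bytes:
        return self._objects[object_id].data

    def find(self, **criteria: str) -> list[str]:
        """Return IDs of objects whose metadata matches every given key/value pair."""
        return [oid for oid, obj in self._objects.items()
                if all(obj.metadata.get(k) == v for k, v in criteria.items())]

store = ObjectStore()
photo_id = store.put(b"<jpeg data>", type="photo", owner="alice")
store.put(b"<mp3 data>", type="music", owner="alice")
print(store.find(type="photo") == [photo_id])  # True
```

Because lookups go by ID or metadata, adding billions more objects never requires deeper or more numerous directory trees.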

Next up, the Storage Networking Industry Association is proposing a new standard called Cloud Data Management Interface (CDMI). CDMI is a set of protocols defining how companies can safely move data between private and public clouds. Because the standard is open, it lends itself to wide adoption. It also offers the opportunity to attach more clearly defined rules to object storage data, such as how long data should be retained, how many copies should be kept, and whether those copies need to be distributed geographically.
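As a rough illustration of the kinds of rules described above, here is a hypothetical policy check covering retention time, copy count, and geographic spread. The field names and region labels are invented for this example. They are not CDMI's actual metadata names, and the sketch does not implement the CDMI protocol.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RetentionPolicy:
    """Hypothetical data-management rules; field names are illustrative, not CDMI's."""
    retention_days: int  # how long the data must be kept
    min_copies: int      # how many replicas must exist
    min_regions: int     # 2 or more means copies must be geographically distributed

def can_delete(policy: RetentionPolicy, age_days: int) -> bool:
    """Data may be deleted only once the retention period has elapsed."""
    return age_days >= policy.retention_days

def replica_problems(policy: RetentionPolicy, replica_regions: list[str]) -> list[str]:
    """List the ways a set of replicas (one region label per copy) breaks the policy."""
    problems = []
    if len(replica_regions) < policy.min_copies:
        problems.append(f"only {len(replica_regions)} of {policy.min_copies} required copies")
    if len(set(replica_regions)) < policy.min_regions:
        problems.append("copies are not spread across enough regions")
    return problems

policy = RetentionPolicy(retention_days=7 * 365, min_copies=3, min_regions=2)
print(can_delete(policy, age_days=400))  # False
print(replica_problems(policy, ["region-a", "region-a", "region-a"]))
```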


Price trends

While it's a given that storage will continue to drop in price per gigabyte, how fast and how far are always the questions. It's impossible to anticipate events such as the 2011 floods in Thailand, which halted some manufacturing and significantly raised hard-drive prices. As bad as the floods were for the people of Thailand, they had a sobering effect on the hard-drive industry: a time-out, as it were. Hard-drive prices have dropped again, though not quite back to pre-flood levels, and they are no longer in the free fall they had been in.

According to Gartner analysts John Monroe and Joseph Unsworth, there is "a widespread and ongoing readiness at the executive level to curtail production at any moment..." and "the HDD sector intends to closely and continuously align production with evolving demand..." After the NAND price free fall in the first two quarters of 2012, a similar resolve seems to be developing in that industry. That, however, can change at the drop of a hat in any competitive market.

According to Forward Insights analyst Greg Wong, "Steady 20 to 25 percent yearly price drops over the next few years" for NAND should be the norm. Supporting this is Gartner's prediction that NAND will drop from the current 85 cents per gigabyte to around 25 cents per gigabyte by 2016. Gartner's numbers also predict a drop from the current 6 cents per gigabyte for 3.5-inch desktop hard drives to a mere 0.8 cents in 2016. Meanwhile, 2.5-inch mobile hard drives should drop from their current 11.5 cents per gigabyte to 3.5 cents per gigabyte.
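Taking Gartner's figures at face value, we can back out the constant yearly decline each forecast implies: r = 1 - (p_end / p_start)^(1 / years). The four-year span is an assumption: it treats "current" as 2012, the baseline year Gartner uses elsewhere in this piece.

```python
def implied_annual_decline(p_start: float, p_end: float, years: int) -> float:
    """Constant yearly decline that turns p_start into p_end over `years` years."""
    return 1 - (p_end / p_start) ** (1 / years)

YEARS = 4  # assumption: "current" means 2012, target year 2016

gartner_cents_per_gb = {
    "NAND": (85.0, 25.0),
    "3.5-inch HDD": (6.0, 0.8),
    "2.5-inch HDD": (11.5, 3.5),
}
for name, (now, later) in gartner_cents_per_gb.items():
    rate = implied_annual_decline(now, later, YEARS)
    print(f"{name}: {rate:.0%} per year")
```

Under that assumption, the NAND and 2.5-inch forecasts work out to about 26 percent a year, roughly in line with Wong's range. The 3.5-inch desktop forecast implies a steeper decline of about 40 percent a year.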

While mainstream storage technology development is slowing in terms of density and performance, it's important to remember just how far we've come. In his 2006 presentation, "Fifty Years in Hard Drives and the Exciting Road Ahead," Seagate's Mark Kryder pointed out that if automobiles had progressed as far as hard drives in the time period from 1979 to 2006, each vehicle would carry 150,000 people, cost $15, and travel nearly a million miles an hour at 36,000 mpg. That's a heck of an improvement, and it will continue for a decade at least with the present basic technologies. After that, who knows what we wily humans have up our sleeves?

